Preprocess

The preprocessing steps consist of merging all retrieved files into one xarray dataset and extracting the spatial and temporal average of the event of interest.

Merge

Here we show how all retrieved files are loaded into one xarray dataset, for both SEAS5 and ERA5.

SEAS5

All retrieved seasonal forecasts are loaded into one xarray dataset. The number of files retrieved depends on the temporal extent of the extreme event being analyzed (i.e., are you looking at a monthly average or a seasonal average?). For the Siberian heatwave, we retrieved 117 files: one for each of the 39 years (1982-2020) and each of the three lead times (see Retrieve). For the UK, we can use more forecasts because the target period is shorter: one month, compared with three months for the Siberian example. We retrieved 5 lead times × 35 years = 175 files.
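The printed output of merge_SEAS5 further down shows which initialization months are used. For the March-May target they are 2, 1 and 12, and for February they are 1, 12, 11, 10 and 9. The hypothetical helper below reproduces both cases. Its rule is our own assumption and is not taken from retrieve._get_init_months: the initialization month itself is skipped, and the last target month must fall within the first six forecast months. Under that assumption, each extra target month removes one usable lead time.

```python
def init_months_for(target_months, max_forecast_month=6):
    """Hypothetical helper: initialization months (and lead-time labels)
    whose forecasts cover all target months.

    Assumes target_months are consecutive and listed in calendar order,
    forecast month 1 is the initialization month itself (not used), and
    the last target month must be forecast month max_forecast_month or earlier.
    """
    first = target_months[0]
    # The first target month can be forecast month 2 up to 7 - len(target_months)
    leadtimes = list(range(2, max_forecast_month + 2 - len(target_months)))
    # Calendar month that lies `lead - 1` months before the first target month
    init_months = [(first - lead) % 12 + 1 for lead in leadtimes]
    return init_months, leadtimes


print(init_months_for([3, 4, 5]))  # ([2, 1, 12], [2, 3, 4])
print(init_months_for([2]))        # ([1, 12, 11, 10, 9], [2, 3, 4, 5, 6])
```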

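Each netCDF file contains one forecast (a single year and initialization month) with all of its ensemble members. It therefore has the dimensions latitude, longitude, number (the ensemble members: 25 for the UK example, 51 in the Siberian dataset) and time (the target month or months). Here we combine these files into one xarray dataset. In it, time spans all years and a new leadtime dimension spans the initialization months (35 years and 5 lead times for the UK). To build it, we loop over the lead times, open all years of each lead time at once with xr.open_mfdataset, and concatenate the lead times along leadtime.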
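The loop-and-concatenate step can be sketched without any files. In this minimal sketch, the hypothetical function fake_forecasts returns a small random dataset. It stands in for what xr.open_mfdataset would return for one initialization month. The toy sizes and coordinates are made up.

```python
import numpy as np
import xarray as xr

n_years, n_members = 3, 4  # toy sizes, far smaller than the real data
time = np.array([f'{y}-02-01' for y in range(1982, 1982 + n_years)],
                dtype='datetime64[ns]')


def fake_forecasts(seed):
    """Hypothetical stand-in for xr.open_mfdataset() over all years of one init month."""
    rng = np.random.default_rng(seed)
    data = rng.random((n_years, n_members, 2, 2))
    return xr.Dataset(
        {'tprate': (('time', 'number', 'latitude', 'longitude'), data)},
        coords={'time': time, 'number': np.arange(n_members),
                'latitude': [51.0, 50.0], 'longitude': [-1.0, 0.0]})


per_leadtime = [fake_forecasts(seed) for seed in range(5)]  # 5 initialization months
merged = xr.concat(per_leadtime, dim='leadtime')             # adds a new leading dimension
merged = merged.assign_coords(leadtime=np.arange(len(per_leadtime)) + 2)
print(merged['tprate'].dims)  # ('leadtime', 'time', 'number', 'latitude', 'longitude')
```

Concatenating along a dimension name that does not exist yet makes xarray add it as the first axis. The lead-time labels are then attached with assign_coords, as in merge_SEAS5.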

[1]:

## Display the value of every expression in a cell, not only the last one
from IPython.core.interactiveshell import InteractiveShell
InteractiveShell.ast_node_interactivity = "all"

[2]:

import os

import sys

sys.path.insert(0, os.path.abspath('../../../'))

import src.cdsretrieve as retrieve

[3]:

os.chdir(os.path.abspath('../../../'))

os.getcwd() #print the working directory

[3]:

'/lustre/soge1/projects/ls/personal/timo/UNSEEN-open'

[4]:

import xarray as xr
import numpy as np

def merge_SEAS5(folder, target_months):
    # Initialization months (and lead times) whose forecasts cover the target months
    init_months, leadtimes = retrieve._get_init_months(target_months)
    print('Lead time: ' + "%.2i" % init_months[0])  # prints the first initialization month
    # Load all years of the first initialization month (file names end in its two-digit number)
    SEAS5_ld1 = xr.open_mfdataset(
        folder + '*' + "%.2i" % init_months[0] + '.nc',
        combine='by_coords')
    SEAS5 = SEAS5_ld1  # Dataset onto which the other lead times are concatenated

    for init_month in init_months[1:]:  # Skip the first, which is already loaded
        print(init_month)
        SEAS5_ld = xr.open_mfdataset(
            folder + '*' + "%.2i" % init_month + '.nc',
            combine='by_coords')
        SEAS5 = xr.concat([SEAS5, SEAS5_ld], dim='leadtime')

    # Label the lead times 2, 3, ... (one per initialization month)
    SEAS5 = SEAS5.assign_coords(leadtime=np.arange(len(init_months)) + 2)
    return SEAS5

[5]:

SEAS5_Siberia = merge_SEAS5(folder='../Siberia_example/SEAS5/',
                            target_months=[3, 4, 5])

Lead time: 02

1

12

[6]:

SEAS5_Siberia

[6]:

<xarray.Dataset>
Dimensions:    (latitude: 41, leadtime: 3, longitude: 132, number: 51, time: 117)
Coordinates:
  * longitude  (longitude) float32 -11.0 -10.0 -9.0 ... 119.0 120.0
  * latitude   (latitude) float32 70.0 69.0 68.0 ... 32.0 31.0 30.0
  * number     (number) int64 0 1 2 3 4 5 6 ... 45 46 47 48 49 50
  * time       (time) datetime64[ns] 1982-03-01 ... 2020-05-01
  * leadtime   (leadtime) int64 2 3 4
Data variables:
    t2m        (leadtime, time, number, latitude, longitude) float32 dask.array<chunksize=(1, 3, 51, 41, 132), meta=np.ndarray>
                 units: K, long_name: 2 metre temperature
                 387.52 MB in 117 chunks of 3.31 MB (887 tasks)
    d2m        (leadtime, time, number, latitude, longitude) float32 dask.array<chunksize=(1, 3, 51, 41, 132), meta=np.ndarray>
                 units: K, long_name: 2 metre dewpoint temperature
                 387.52 MB in 117 chunks of 3.31 MB (887 tasks)
Attributes:
    Conventions:  CF-1.6
    history:      2020-09-08 09:33:24 GMT by grib_to_netcdf-2.16.0: /opt/ecmwf/eccodes/bin/grib_to_netcdf -S param -o /cache/data1/adaptor.mars.external-1599557575.5884402-22815-11-a3e13f38-976c-41fd-bc34-d70ac6258b8d.nc /cache/tmp/a3e13f38-976c-41fd-bc34-d70ac6258b8d-adaptor.mars.external-1599557575.5891798-22815-3-tmp.grib

You can, for example, select a single latitude, longitude, time, ensemble member and lead time as follows (add .load() to compute and display the values):

[ ]:

SEAS5_Siberia.sel(latitude=60,
                  longitude=-10,
                  time='2000-03',
                  number=26,
                  leadtime=3).load()

We can repeat this for the UK example, where just February is the target month:

[10]:

SEAS5_UK = merge_SEAS5(folder = '../UK_example/SEAS5/', target_months = [2])

Lead time: 01

12

11

10

9

The SEAS5 total precipitation rate (tprate) is in m/s. Below we convert it to mm/day and update the variable attributes accordingly. The new attributes are listed under tprate in the output.
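The conversion factor follows from 1 m = 1000 mm and 1 day = 3600 × 24 s = 86,400 s. A rate in m/s is therefore multiplied by 1000 × 86,400 = 8.64 × 10^7 to obtain mm/day. A quick check with made-up rates:

```python
import numpy as np

MM_PER_M = 1000
S_PER_DAY = 3600 * 24
factor = MM_PER_M * S_PER_DAY  # 86,400,000 (mm/day) per (m/s)

# Made-up precipitation rates in m/s
tprate_m_per_s = np.array([0.0, 2.5e-8, 5.0e-8])
tprate_mm_per_day = tprate_m_per_s * factor
print(tprate_mm_per_day)  # approximately [0, 2.16, 4.32]
```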

[11]:

SEAS5_UK['tprate'] = SEAS5_UK['tprate'] * 1000 * 3600 * 24  ## From m/s to mm/day
SEAS5_UK['tprate'].attrs = {'long_name': 'rainfall',
                            'units': 'mm/day',
                            'standard_name': 'thickness_of_rainfall_amount'}
SEAS5_UK

[11]:

<xarray.Dataset>
Dimensions:    (latitude: 11, leadtime: 5, longitude: 14, number: 25, time: 35)
Coordinates:
  * time       (time) datetime64[ns] 1982-02-01 ... 2016-02-01
  * latitude   (latitude) float32 60.0 59.0 58.0 ... 52.0 51.0 50.0
  * number     (number) int32 0 1 2 3 4 5 6 ... 19 20 21 22 23 24
  * longitude  (longitude) float32 -11.0 -10.0 -9.0 ... 0.0 1.0 2.0
  * leadtime   (leadtime) int64 2 3 4 5 6
Data variables:
    tprate     (leadtime, time, number, latitude, longitude) float32 dask.array<chunksize=(1, 1, 25, 11, 14), meta=np.ndarray>
                 long_name: rainfall, units: mm/day, standard_name: thickness_of_rainfall_amount
                 2.69 MB in 175 chunks of 15.40 kB (1715 tasks)
Attributes:
    Conventions:  CF-1.6
    history:      2020-05-13 14:49:43 GMT by grib_to_netcdf-2.16.0: /opt/ecmwf/eccodes/bin/grib_to_netcdf -S param -o /cache/data7/adaptor.mars.external-1589381366.1540039-11561-3-ad31a097-72e2-45ce-a565-55c62502f358.nc /cache/tmp/ad31a097-72e2-45ce-a565-55c62502f358-adaptor.mars.external-1589381366.1545565-11561-1-tmp.grib

ERA5

One netCDF file was downloaded for each year, named ERA5_yyyy (for example, ERA5_1981). We can therefore load ERA5 by opening all downloaded years at once:
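xr.open_mfdataset expands a glob pattern such as ERA5_????.nc into the list of matching files. Each ? stands for exactly one character, so only file names with a four-character year suffix match. The snippet below checks this with Python's fnmatch module, which implements the same shell-style wildcards that glob uses. The file names other than the two yearly ones are hypothetical.

```python
from fnmatch import fnmatchcase

pattern = 'ERA5_????.nc'  # each '?' matches exactly one character
candidates = ['ERA5_1979.nc', 'ERA5_2020.nc',    # yearly files: matched
              'ERA5_1979_v2.nc', 'ERA5_all.nc']  # hypothetical other files: not matched
matched = [name for name in candidates if fnmatchcase(name, pattern)]
print(matched)  # ['ERA5_1979.nc', 'ERA5_2020.nc']
```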

[9]:

ERA5_Siberia = xr.open_mfdataset('../Siberia_example/ERA5/ERA5_????.nc',combine='by_coords') ## open the data

ERA5_Siberia

[9]:

<xarray.Dataset>
Dimensions:    (latitude: 41, longitude: 132, time: 126)
Coordinates:
  * longitude  (longitude) float32 -11.0 -10.0 -9.0 ... 119.0 120.0
  * latitude   (latitude) float32 70.0 69.0 68.0 ... 32.0 31.0 30.0
  * time       (time) datetime64[ns] 1979-03-01 ... 2020-05-01
Data variables:
    t2m        (time, latitude, longitude) float32 dask.array<chunksize=(3, 41, 132), meta=np.ndarray>
                 units: K, long_name: 2 metre temperature
                 2.73 MB in 42 chunks of 64.94 kB (126 tasks)
    d2m        (time, latitude, longitude) float32 dask.array<chunksize=(3, 41, 132), meta=np.ndarray>
                 units: K, long_name: 2 metre dewpoint temperature
                 2.73 MB in 42 chunks of 64.94 kB (126 tasks)
Attributes:
    Conventions:  CF-1.6
    history:      2020-09-08 13:26:08 GMT by grib_to_netcdf-2.16.0: /opt/ecmwf/eccodes/bin/grib_to_netcdf -S param -o /cache/data5/adaptor.mars.internal-1599571563.605006-1463-33-b77abfe4-0299-4cba-9b8c-c5a877f44943.nc /cache/tmp/b77abfe4-0299-4cba-9b8c-c5a877f44943-adaptor.mars.internal-1599571563.6055787-1463-13-tmp.grib

[13]:

ERA5_UK = xr.open_mfdataset('../UK_example/ERA5/ERA5_????.nc',combine='by_coords') ## open the data

ERA5_UK

[13]:

<xarray.Dataset>
Dimensions:    (latitude: 11, longitude: 14, time: 42)
Coordinates:
  * latitude   (latitude) float32 60.0 59.0 58.0 ... 52.0 51.0 50.0
  * longitude  (longitude) float32 -11.0 -10.0 -9.0 ... 0.0 1.0 2.0
  * time       (time) datetime64[ns] 1979-02-01 ... 2020-02-01
Data variables:
    tp         (time, latitude, longitude) float32 dask.array<chunksize=(1, 11, 14), meta=np.ndarray>
                 units: m, long_name: Total precipitation
                 25.87 kB in 42 chunks of 616 B (126 tasks)
Attributes:
    Conventions:  CF-1.6
    history:      2020-09-08 13:36:54 GMT by grib_to_netcdf-2.16.0: /opt/ecmwf/eccodes/bin/grib_to_netcdf -S param -o /cache/data6/adaptor.mars.internal-1599572211.936053-12058-17-60c5a89d-0507-4593-8870-aacff3b72426.nc /cache/tmp/60c5a89d-0507-4593-8870-aacff3b72426-adaptor.mars.internal-1599572211.9366653-12058-6-tmp.grib

Event definition

Time selection

For the UK, the event of interest is February-average precipitation over the UK. Since we downloaded monthly averages, no preprocessing along the time dimension is needed here. For the Siberian heatwave, we are interested in the March-May average, so we need the seasonal average of the monthly time series. We cannot simply take the mean of the three months, because the months have different numbers of days (see this example in the xarray documentation). Therefore we take an average weighted by the number of days in each month:
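March and May have 31 days and April has 30, so the weights are 31/92, 30/92 and 31/92 (about 0.337, 0.326 and 0.337). A minimal numeric sketch with made-up monthly temperatures shows how the weighted and simple means differ:

```python
import numpy as np

days = np.array([31, 30, 31])                  # days in March, April, May
weights = days / days.sum()                    # 31/92, 30/92, 31/92
t2m_monthly = np.array([270.0, 280.0, 300.0])  # made-up monthly means in K

weighted_mean = np.sum(t2m_monthly * weights)  # 26070 / 92, approx. 283.370 K
simple_mean = t2m_monthly.mean()               # 850 / 3, approx. 283.333 K
print(weights.round(4), weighted_mean, simple_mean)
```

np.average(t2m_monthly, weights=days) gives the same weighted mean, because it normalizes the weights itself.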

[11]:

month_length = SEAS5_Siberia.time.dt.days_in_month

month_length

[11]:

<xarray.DataArray (time: 117)>
array([31, 30, 31, 31, 30, 31, ..., 31, 30, 31, 31, 30, 31])
Coordinates:
  * time     (time) datetime64[ns] 1982-03-01 ... 2020-05-01

[12]:

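# Calculate the weights: the number of days in each month divided by the
# total number of days in that year's March-May season (grouping by 'time.year')
weights = month_length.groupby('time.year') / month_length.groupby('time.year').sum()
weights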

[12]:

<xarray.DataArray (time: 117)>
array([0.33695652, 0.32608696, 0.33695652, ..., 0.33695652, 0.32608696, 0.33695652])
Coordinates:
  * time     (time) datetime64[ns] 1982-03-01 ... 2020-05-01
    year     (time) int64 1982 1982 1982 ... 2020 2020 2020

[13]:

# Test that the weights of each season sum to 1.0

np.testing.assert_allclose(weights.groupby('time.year').sum().values, np.ones(39)) # one season per year, 39 years

[14]:

# Calculate the weighted average

SEAS5_Siberia_weighted = (SEAS5_Siberia * weights).groupby('time.year').sum(dim='time', min_count = 3)

SEAS5_Siberia_weighted

[14]:

- Dimensions: latitude: 41, leadtime: 3, longitude: 132, number: 51, year: 39

- Coordinates:
  - longitude (longitude) float32 -11.0 -10.0 -9.0 ... 119.0 120.0 (units: degrees_east; long_name: longitude)
  - leadtime (leadtime) int64 2 3 4
  - latitude (latitude) float32 70.0 69.0 68.0 ... 32.0 31.0 30.0 (units: degrees_north; long_name: latitude)
  - number (number) int64 0 1 2 3 4 5 6 ... 45 46 47 48 49 50 (long_name: ensemble_member)
  - year (year) int64 1982 1983 1984 ... 2018 2019 2020

- Data variables:
  - t2m (year, leadtime, number, latitude, longitude) float64 dask.array<chunksize=(1, 1, 51, 41, 132), meta=np.ndarray>; 258.35 MB in 117 chunks of 2.21 MB (2550 tasks)
  - d2m (year, leadtime, number, latitude, longitude) float64 dask.array<chunksize=(1, 1, 51, 41, 132), meta=np.ndarray>; 258.35 MB in 117 chunks of 2.21 MB (2550 tasks)

Or, as a function:

[15]:

def season_mean(ds, years, calendar='standard'):

    # `calendar` is not used: days_in_month follows the calendar of ds.time
    # Make a DataArray with the number of days in each month, size = len(time)
    month_length = ds.time.dt.days_in_month

    # Calculate the weights by grouping by 'time.year' (one season per year)
    weights = month_length.groupby('time.year') / month_length.groupby('time.year').sum()

    # Test that the sum of the weights for each year is 1.0
    np.testing.assert_allclose(weights.groupby('time.year').sum().values, np.ones(years))

    # Calculate the weighted average; min_count=3 gives NaN where fewer than 3 months are valid
    return (ds * weights).groupby('time.year').sum(dim='time', min_count=3)
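The `min_count=3` argument matters for the SEAS5 data, where some ensemble members are missing (NaN) in some years, as the NaNs in the outputs further down show. With xarray's default skipna behaviour, a sum over an all-NaN group returns 0 rather than NaN, so a missing member would silently turn into a 0 K "seasonal mean"; requiring three valid months yields NaN instead. The numpy helper `weighted_season_sum` below is a hypothetical stand-in that mimics this rule for a single season; it is not part of xarray or of the notebook.

```python
import numpy as np


def weighted_season_sum(values, weights, min_count=None):
    """Hypothetical helper: weighted sum that skips NaNs (like xarray's
    sum with skipna=True) and returns NaN when fewer than `min_count`
    valid values are present."""
    weighted = values * weights
    n_valid = np.count_nonzero(~np.isnan(weighted))
    if min_count is not None and n_valid < min_count:
        return np.nan
    return np.nansum(weighted)


# Month-length weights for March, April and May (31 + 30 + 31 = 92 days)
weights = np.array([31, 30, 31]) / 92

complete = np.array([270.0, 275.0, 280.0])
missing_member = np.full(3, np.nan)  # e.g. an ensemble member that does not exist that year
one_month_missing = np.array([270.0, np.nan, 280.0])

print(round(weighted_season_sum(complete, weights, min_count=3), 6))  # 275.0
print(weighted_season_sum(missing_member, weights))                   # 0.0
print(weighted_season_sum(missing_member, weights, min_count=3))      # nan
```

Without `min_count`, the all-NaN member collapses to 0.0, which would look like an extreme cold outlier rather than missing data.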

[16]:

ERA5_Siberia_weighted = season_mean(ERA5_Siberia, years = 42)

ERA5_Siberia_weighted

[16]:

- Dimensions: latitude: 41, longitude: 132, year: 42

- Coordinates:
  - longitude (longitude) float32 -11.0 -10.0 -9.0 ... 119.0 120.0 (units: degrees_east; long_name: longitude)
  - latitude (latitude) float32 70.0 69.0 68.0 ... 32.0 31.0 30.0 (units: degrees_north; long_name: latitude)
  - year (year) int64 1979 1980 1981 ... 2018 2019 2020

- Data variables:
  - t2m (year, latitude, longitude) float64 dask.array<chunksize=(1, 41, 132), meta=np.ndarray>; 1.82 MB in 42 chunks of 43.30 kB (547 tasks)
  - d2m (year, latitude, longitude) float64 dask.array<chunksize=(1, 41, 132), meta=np.ndarray>; 1.82 MB in 42 chunks of 43.30 kB (547 tasks)

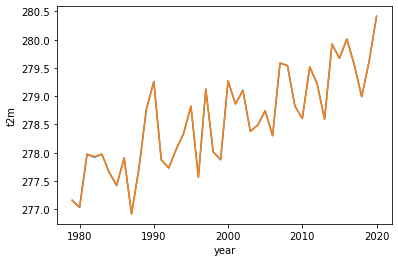

What is the difference between the simple mean and the weighted mean?

The difference is barely visible:

[17]:

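# Spatial mean of the month-length-weighted seasonal mean (one value per year)

ERA5_Siberia_weighted['t2m'].mean(['longitude', 'latitude']).plot()

# Spatial mean of the unweighted seasonal mean, for comparison

ERA5_Siberia['t2m'].groupby('time.year').mean().mean(['longitude','latitude']).plot()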

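Why is the difference so small? For a March to May season the month lengths are 31, 30 and 31 days, so the weights (31/92, 30/92, 31/92) are all close to 1/3. A minimal numpy sketch with made-up monthly values (not the notebook's data) makes the size of the effect explicit, using the same month_length / total-season-length weighting as `season_mean`:

```python
import numpy as np

# Days in March, April and May: the target season of the Siberian example
days_in_month = np.array([31, 30, 31])

# Each month's weight is its share of the season length (31/92, 30/92, 31/92)
weights = days_in_month / days_in_month.sum()

# Illustrative monthly mean 2-metre temperatures in kelvin (not real data)
monthly_t2m = np.array([255.0, 268.0, 280.0])

weighted_mean = np.sum(weights * monthly_t2m)  # month-length weighted
simple_mean = monthly_t2m.mean()               # every month counts equally

# Closed form of the difference for a 31/30/31-day season
difference = (monthly_t2m[0] - 2 * monthly_t2m[1] + monthly_t2m[2]) / 276

print(round(weighted_mean - simple_mean, 4))  # -0.0036
```

Because March and May carry the same weight, the weighted minus simple mean reduces to (T_Mar - 2 T_Apr + T_May) / 276, which stays at a few hundredths of a kelvin or less for realistic monthly values.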

Spatial selection¶

What spatial extent defines the event you are analyzing? The easiest option is to select a lat-lon box, as we did for the Siberian heatwave example (i.e. we average the temperature over 50-70N, 65-120E; this box is also used here).
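The box selection and averaging can be sketched with plain numpy boolean masks on a 1-degree grid shaped like the retrieved domain (70N to 30N, 11W to 120E). The grid and the random field below are hypothetical stand-ins for the real datasets. In the notebook the same box is selected with xarray's `.sel(latitude=slice(70, 50), longitude=slice(65, 120))`; because latitude is stored from north to south, the latitude bounds are written in that order.

```python
import numpy as np

# Hypothetical 1-degree grid shaped like the retrieved domain:
# latitude stored north-to-south (70N..30N), longitude west-to-east (11W..120E)
lat = np.arange(70.0, 29.0, -1.0)   # 41 latitudes
lon = np.arange(-11.0, 121.0, 1.0)  # 132 longitudes

# Hypothetical temperature field (K) with shape (latitude, longitude)
rng = np.random.default_rng(0)
t2m = 270.0 + rng.normal(size=(lat.size, lon.size))

# Boolean masks for the Siberian box, 50-70N and 65-120E, bounds included
lat_in_box = (lat >= 50) & (lat <= 70)
lon_in_box = (lon >= 65) & (lon <= 120)

# np.ix_ combines the two 1-D masks into a rectangular (lat, lon) selection
box = t2m[np.ix_(lat_in_box, lon_in_box)]
box_mean = box.mean()  # unweighted box average

print(box.shape)  # (21, 56)
```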

If you want to use a domain other than a lat-lon box, you can mask the datasets. For the California Fires example, we select the domain with high temperature anomalies (>2 standard deviations), see California_august_temperature_anomaly. For the UK example, we want a country-averaged time series instead of a box. In this case, we use another observational product, the EOBS dataset, which covers Europe. We upscale this dataset to the same resolution as SEAS5 and create a mask to take the spatial average over the UK, see Using EOBS + upscaling.
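As an illustration of the anomaly-based masking idea, here is a hedged numpy sketch: grid cells whose event-year anomaly exceeds 2 standard deviations of a reference climatology define the domain, and the field is then averaged over that domain for every year. The synthetic data, the choice of reference years and the use of the population standard deviation are assumptions for illustration only; the California notebook may compute the anomaly differently.

```python
import numpy as np

# Hypothetical seasonal-mean temperatures (K) with shape (year, latitude, longitude)
n_years, n_lat, n_lon = 41, 10, 12
years = np.arange(n_years)

# Reference years alternate between 291 K and 289 K at every grid cell,
# so the reference climatology has mean 290 K and standard deviation 1 K
t2m = np.where(years % 2 == 0, 291.0, 289.0)[:, None, None] * np.ones((n_years, n_lat, n_lon))

# The last year is the (hypothetical) event: 290 K everywhere, 295 K in one corner
t2m[-1] = 290.0
t2m[-1, :4, :5] = 295.0

# Standardised anomaly of the event year relative to all earlier years
reference = t2m[:-1]
z = (t2m[-1] - reference.mean(axis=0)) / reference.std(axis=0)

# The event domain: grid cells with an anomaly above 2 standard deviations
mask = z > 2

# Average every year over the masked domain (cells outside the mask become NaN)
masked = np.where(mask, t2m, np.nan)  # the 2-D mask broadcasts over the year axis
domain_series = np.nanmean(masked, axis=(1, 2))

print(int(mask.sum()))  # 20
```

The same pattern applies to a country mask such as the UK one: only the way `mask` is built changes.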

A latitude-weighted average is needed, since grid cell area decreases with latitude. We first take the ‘normal’ (unweighted) average:

[36]:

ERA5_Siberia_events_zoomed = (

ERA5_Siberia_weighted['t2m'].sel( # Select 2 metre temperature

latitude=slice(70, 50), # Select the latitudes (stored north to south)

longitude=slice(65, 120)). # Select the longitudes

mean(['longitude', 'latitude'])) # Unweighted spatial mean
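The ‘normal’ average above treats every grid cell equally. A common way to add the latitude weighting is to weight each cell by cos(latitude), which is proportional to the cell area on a regular lat-lon grid. The numpy sketch below uses a hypothetical field on the zoomed box; in xarray, the same weighting is available through `DataArray.weighted`, e.g. `da.weighted(np.cos(np.deg2rad(da.latitude))).mean(['longitude', 'latitude'])`, although that is not what the notebook cells here use.

```python
import numpy as np

# Zoomed Siberian box on a hypothetical 1-degree grid: 70N..50N, 65E..120E
lat = np.arange(70.0, 49.0, -1.0)  # 21 latitudes, north to south
lon = np.arange(65.0, 121.0, 1.0)  # 56 longitudes

# Hypothetical field that cools towards the pole: 300 K minus 0.5 K per degree of latitude
t2m = np.repeat((300.0 - 0.5 * lat)[:, None], lon.size, axis=1)

# On a regular lat-lon grid, grid-cell area is proportional to cos(latitude)
cos_lat = np.cos(np.deg2rad(lat))
weights = np.repeat(cos_lat[:, None], lon.size, axis=1)

unweighted = t2m.mean()                      # every cell counts equally
weighted = np.average(t2m, weights=weights)  # cells weighted by their area

print(round(unweighted, 2))  # 270.0
```

Because the southern (warmer) rows cover more area, `weighted` comes out slightly above `unweighted` for this field.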

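We repeat the ‘normal’ (unweighted) average for the SEAS5 events, both over the full domain (30-70N, 11W-120E) and over the zoomed box (50-70N, 65-120E):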

[37]:

SEAS5_Siberia_events = (

SEAS5_Siberia_weighted['t2m'].sel(

latitude=slice(70, 30),

longitude=slice(-11, 120)).

mean(['longitude', 'latitude']))

SEAS5_Siberia_events.load()

/soge-home/users/cenv0732/.conda/envs/UNSEEN-open/lib/python3.8/site-packages/xarray/core/nanops.py:142: RuntimeWarning: Mean of empty slice

return np.nanmean(a, axis=axis, dtype=dtype)

[37]:

- Dimensions: year: 39, leadtime: 3, number: 51

array([[[277.70246026, 277.08011538, 277.05805243, ..., nan, nan, nan], [276.54063304, 277.63527276, 276.53540684, ..., nan, nan, nan], [276.94382457, 277.22540106, 277.25375804, ..., nan, nan, nan]], [[276.68638666, 276.64418409, 276.88169219, ..., nan, nan, nan], [277.06362955, 277.30470221, 276.49967939, ..., nan, nan, nan], [276.37166345, 276.63563118, 277.05456392, ..., nan, nan, nan]], [[277.53103277, 277.49691758, 278.32115366, ..., nan, nan, nan], [277.53911427, 278.11393678, 277.66278741, ..., nan, nan, nan], [278.24589375, 277.71341079, 277.3067712 , ..., nan, nan, nan]], ..., [[278.78906418, 278.0626699 , 278.09438675, ..., 278.10947691, 278.27620377, 278.18620179], [278.65929139, 277.33954004, 278.65951576, ..., 278.22959696, 278.32163068, 278.94724957], [278.90689211, 277.9030209 , 279.13818072, ..., 278.76767259, 279.13397914, 277.76992423]], [[278.6218426 , 277.95006232, 278.22900254, ..., 277.56330007, 277.99480916, 277.66857676], [278.39808792, 277.65889255, 277.92266928, ..., 278.39390445, 277.90353039, 278.01793147], [278.63429219, 278.11630486, 278.58465727, ..., 277.7081865 , 277.73949614, 278.65482764]], [[279.28124227, 278.53474142, 278.47436518, ..., 278.93209185, 278.11716261, 279.3904762 ], [277.77935773, 279.15571385, 279.02168652, ..., 279.25803913, 278.8991169 , 278.72803803], [278.54721722, 278.25816177, 279.65502139, ..., 279.01242149, 278.80174459, 278.23615329]]])

- Coordinates:
  - leadtime (leadtime) int64 2 3 4
  - number (number) int64 0 1 2 3 4 5 6 ... 45 46 47 48 49 50 (long_name: ensemble_member)
  - year (year) int64 1982 1983 1984 ... 2018 2019 2020

[39]:

SEAS5_Siberia_events_zoomed = (

SEAS5_Siberia_weighted['t2m'].sel(

latitude=slice(70, 50),

longitude=slice(65, 120)).

mean(['longitude', 'latitude']))

SEAS5_Siberia_events_zoomed.load()

[39]:

- Dimensions: year: 39, leadtime: 3, number: 51

array([[[269.41349502, 267.46724112, 268.92858923, ..., nan, nan, nan], [267.30541714, 269.45786213, 267.88083134, ..., nan, nan, nan], [266.70463988, 269.36559057, 267.85380896, ..., nan, nan, nan]], [[267.65255078, 267.85459971, 267.93397041, ..., nan, nan, nan], [267.86908721, 269.16875401, 266.25505375, ..., nan, nan, nan], [267.37705396, 268.0216673 , 269.216725 , ..., nan, nan, nan]], [[269.11559244, 270.30993792, 268.97992022, ..., nan, nan, nan], [268.42632866, 270.23730451, 268.14872887, ..., nan, nan, nan], [269.91675564, 269.37623754, 268.62430776, ..., nan, nan, nan]], ..., [[270.1395106 , 269.2763681 , 269.63408345, ..., 267.98167445, 270.99148119, 269.2334804 ], [269.47838693, 267.62436995, 270.46814617, ..., 269.60061317, 269.2030878 , 270.34174847], [271.39762301, 268.06102893, 271.27933216, ..., 269.56866515, 270.64943148, 266.36754614]], [[269.32829344, 268.54758963, 269.10385302, ..., 268.03762412, 269.16690743, 268.41760566], [269.09579833, 268.27844902, 268.80430384, ..., 269.38437353, 269.58112924, 269.15390309], [269.2755198 , 269.28402279, 270.34429505, ..., 268.05044769, 269.01128231, 270.09822017]], [[271.35447849, 269.73507649, 269.16852164, ..., 270.77523033, 268.30803885, 271.87237937], [269.33923167, 270.81122764, 269.97102764, ..., 272.56708782, 270.69195734, 269.90622653], [270.37695877, 269.72247595, 272.524012 , ..., 270.04026793, 269.81585811, 267.24061429]]])

- Coordinates:
  - leadtime (leadtime) int64 2 3 4
  - number (number) int64 0 1 2 3 4 5 6 ... 45 46 47 48 49 50 (long_name: ensemble_member)
  - year (year) int64 1982 1983 1984 ... 2018 2019 2020

[40]:

SEAS5_Siberia_events.to_dataframe().to_csv('Data/SEAS5_Siberia_events.csv')

ERA5_Siberia_events.to_dataframe().to_csv('Data/ERA5_Siberia_events.csv')

[41]:

SEAS5_Siberia_events_zoomed.to_dataframe().to_csv('Data/SEAS5_Siberia_events_zoomed.csv')

ERA5_Siberia_events_zoomed.to_dataframe().to_csv('Data/ERA5_Siberia_events_zoomed.csv')